Homo Digitalis became an observer-member of EDRi

We are very pleased and proud to announce that Homo Digitalis is now an observer-member of European Digital Rights – EDRi. EDRi, founded in 2002, is the biggest union of digital rights organizations in the world.

Homo Digitalis is the first Greek organization to be accepted by EDRi, and we feel very honoured by this. As an observer-member, we will have the opportunity to participate alongside EDRi and its members in joint actions, to exchange knowledge and opinions with renowned specialists from all around the globe, and to increase our organization’s influence.

We would like to warmly thank epicenter.works and Bits of Freedom, which supported us from the first moment with their reference letters, as well as all the EDRi members who voted in our favour.

Learn more about EDRi and its members at: https://edri.org/members/

Homo Digitalis's first seminar was successfully completed

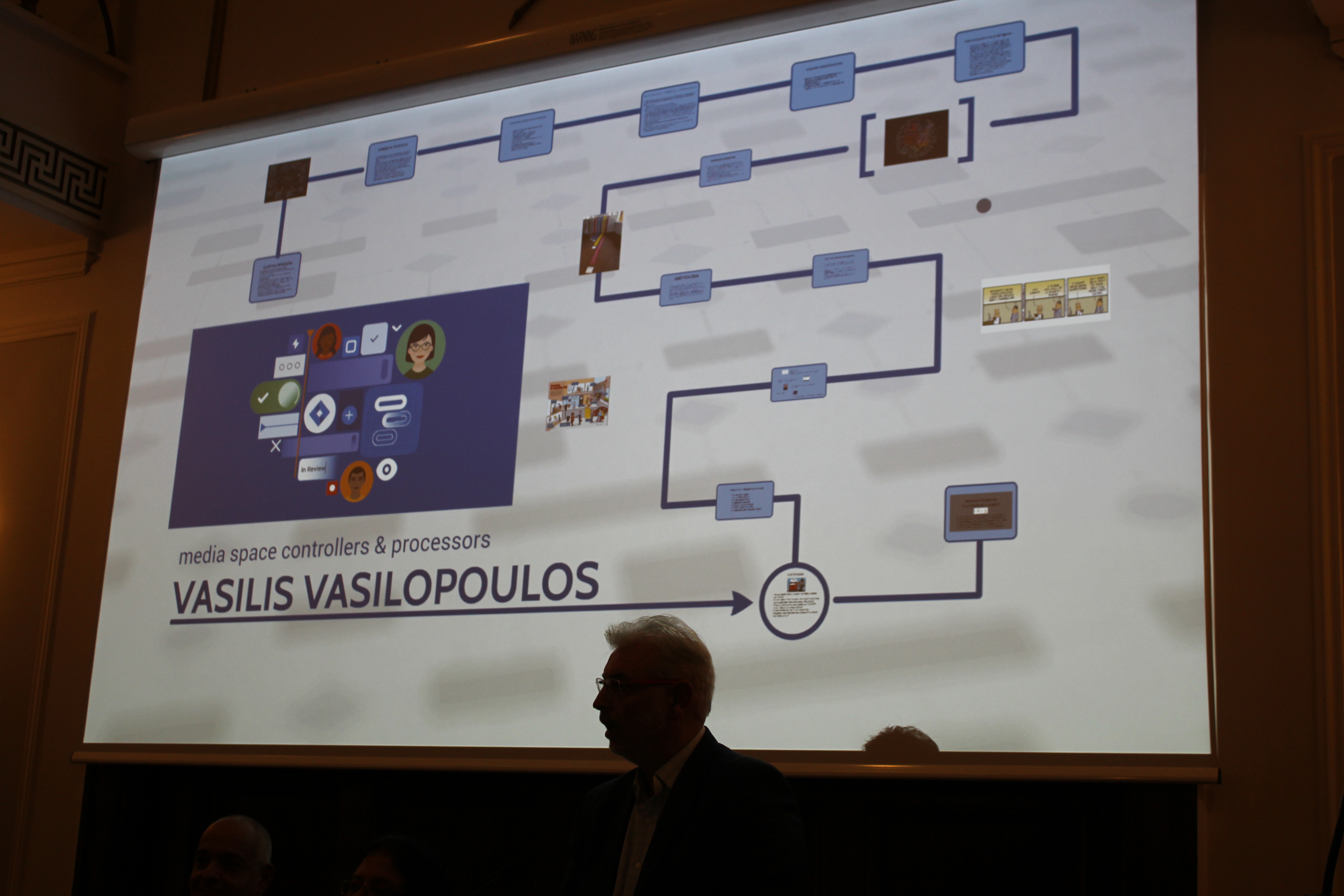

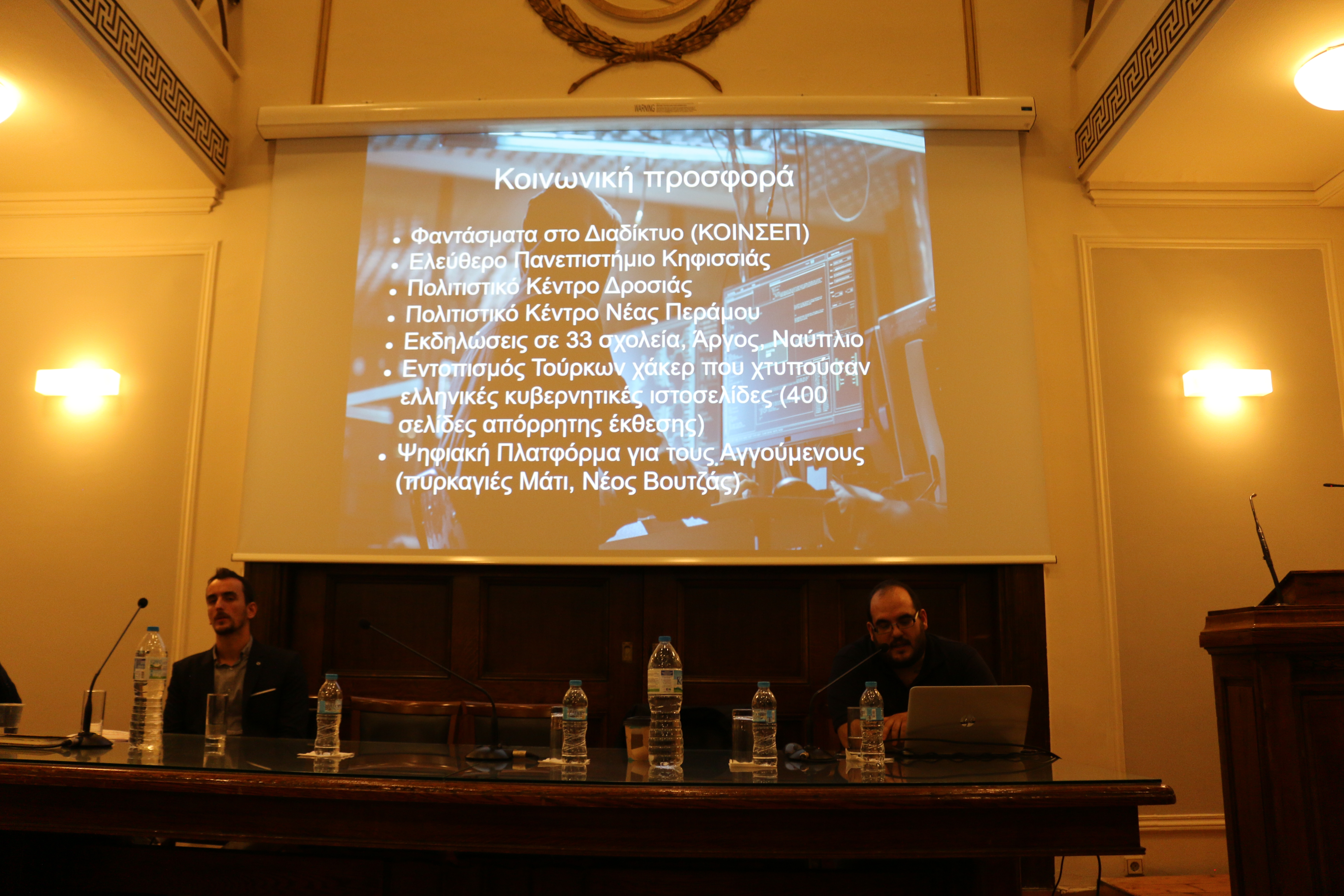

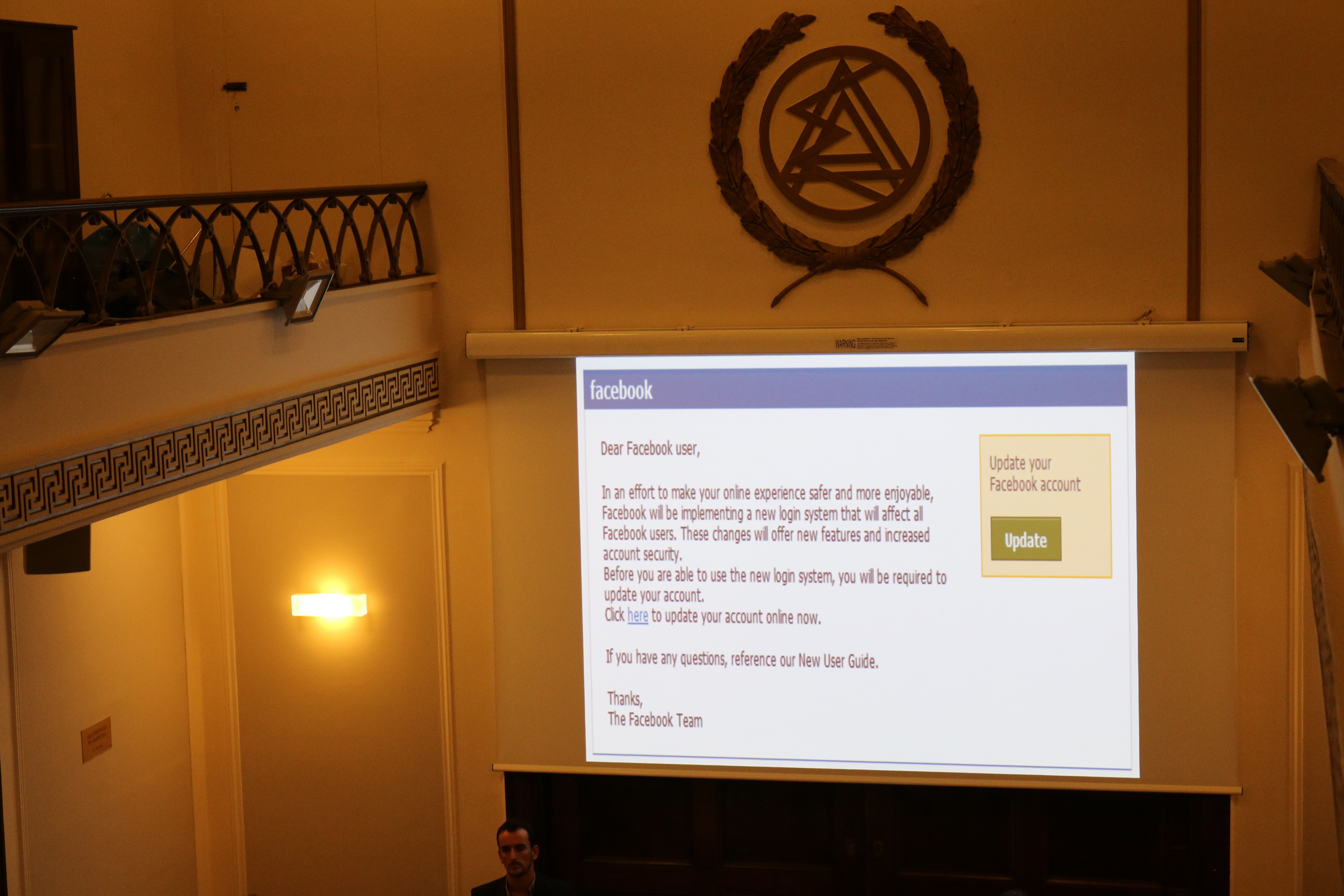

The first seminar organized by Homo Digitalis, entitled “European Cyber Security Month: Real Challenges-Legal and Technical Solutions”, was successfully completed. More than 150 academics, professionals, students and citizens participated.

The seminar took place on Wednesday, October 31, at the Athens Bar Association. Its central message was the need for better information on the legal and technical aspects of cybersecurity.

In the framework of the European Cybersecurity Month, the workshop entitled “Real Challenges-Legal and Technical Solutions” brought together more than 150 academics, professionals and students from both legal and computer science backgrounds as well as several citizens with a keen interest in this particular issue. Particularly important was the presence of representatives from the Ministry of Digital Policy, the Data Protection Authority, the Authority for the Confidentiality of Communications, the Electronic Crime Prosecution Division and the judiciary.

The first session of the seminar analyzed the legal challenges and obligations arising in cyberspace, while the second included a demonstration of social engineering and a presentation of the national cyber security team. The speakers were prominent lawyers and computer professionals, who demonstrated the collaboration needed between the two fields to achieve fuller user protection.

We wholeheartedly thank the Athens Bar Association for its hospitality and support, as well as our outstanding speakers and collaborators, without whom this event could not have taken place.

We are particularly grateful to the Attorney General of the Supreme Court, the Director of the Electronic Crime Investigation Department, the Data Protection Authority, the Ministry of Digital Policy and all the organizations that honored us through their presence.

We gratefully thank all of you who attended the seminar and showed us that the interest in digital rights is constantly increasing in our country.

You can see photos from the seminar here:

Homo Digitalis on the 'Anamenomena kai Mi' (Expected and Unexpected) show (First Program)

Stefanos Vitoratos, one of the co-founders of Homo Digitalis, was a guest on the radio show ‘Anamenomena kai Mi’ on the First Program of ERT (91.6 and 105.8) on October 29, 2018.

Seminar: “European Cybersecurity Month: Real Challenges-Legal and Technical Solutions”

In the context of the European Cyber Security Month campaign under the auspices of the European Network and Information Security Agency (ENISA) and the European Commission, Homo Digitalis is organizing a conference entitled “European Cyber Security Month: Real Challenges – Legal and Technical Solutions”.

As the use of the Internet and IT keeps growing, creating a secure environment for infrastructure and services is particularly important. However, legal compliance alone, despite the important legislative initiatives of the European Union, does not fully shield organizations against cyber attacks. The concept of cyber security has evolved considerably since it first emerged, and a thorough risk assessment is the first important step towards the security of network and information systems. Through its interdisciplinary approach, the workshop aims to highlight the need for legal and technical tools to work together to achieve the utmost protection of users.

The seminar will be held on October 31, 2018, at 18:00, at the Athens Bar Association’s ceremonial hall (60 Akadimias Street, 106 79 Athens). It has been announced on the official website of the European Cyber Security Month campaign under the auspices of the European Network and Information Security Agency (ENISA) and the European Commission.

The event is open to the public and admission is free of charge. It is addressed to all public and private organizations, academic institutions, lawyers and professionals in the computer industry, as well as all citizens interested in enhancing cyber security and its legal and technical dimensions.

The eminent speakers who honour us with their presence are distinguished professionals in their fields (lawyers, judges, computer engineers) with years of experience and a keen interest in cyber security challenges and solutions.

Homo Digitalis signs EPIC's Universal Guidelines for Artificial Intelligence

Today the Electronic Privacy Information Center (EPIC) published the Universal Guidelines for Artificial Intelligence in Brussels, at the Public Voice symposium “AI, Ethics, and Fundamental Rights.” The symposium is part of the 40th International Conference of Data Protection and Privacy Commissioners (ICDPPC 2018).

The Universal Guidelines set out 12 principles to “inform and improve the design and use of AI. The Guidelines are intended to maximize the benefits of AI, to minimize the risk, and to ensure the protection of human rights.”

Homo Digitalis is among the organizations and experts around the world that have signed and endorsed EPIC’s Universal Guidelines for Artificial Intelligence.

You can access the full document and the list of endorsements here.

You can access the related press release here.

Homo Digitalis submits a report to the Greek Parliament for the negotiations concerning ePrivacy

On 23/10/18, in the context of the negotiations for the reform of the existing legislation (Directive 2002/58/EC) on the processing of personal data and the protection of privacy in digital communications, Homo Digitalis submitted a report to the President and the Vice-Presidents of the Greek Parliament, posing specific questions to the responsible Minister.

The questions as they were submitted in the report:

- Given the growing importance of the principles of privacy by design and privacy by default for safeguarding the integrity and credibility of digital communications, does the Greek Government support the incorporation of these principles into the text of the proposed European Regulation for the protection of private life in digital communications?

- Given the important decisions taken by the Court of Justice of the European Union concerning the retention of data generated or processed in connection with the provision of publicly available digital communication services (ECJ, Joined Cases C-293/12 and C-594/12, Digital Rights Ireland and others, 8 April 2014, and ECJ, Joined Cases C-203/15 and C-698/15, Tele2 Sverige AB v. Post- och telestyrelsen and UK Home Office v. Tom Watson and others, 21 December 2016), does the Greek Government commit to opposing any amendment to the text of the proposed European Regulation that would diverge from these decisions?

- Given the need for a common legal framework that will clearly regulate the processing of personal data and the protection of privacy in digital communications, does the Greek Government believe that creating two different and divergent legal frameworks for communications, one regulating communications in transmission and a second regulating communications stored by the companies providing digital communication services, constitutes a correct approach to the legal issues that may arise?

- Finally, given the need to create a special legal framework that will reinforce the provisions of the GDPR and provide enhanced protection for the processing of personal data and the protection of privacy in digital communications, does the Greek Government commit to the swift finalization of the text under negotiation of the European Regulation for the protection of privacy in digital communications?

Homo Digitalis encourages the Greek Parliament members to adopt this Report.

On the same day, Homo Digitalis submitted a letter to the Minister of Justice, Mr. M. Kalogirou.

You can see the full content of the Report in Greek HERE.

Can machines replace judges?

A philosophical approach by Philippos Kourakis*

There are various ways in which technology could change the work of those involved in lawmaking and law enforcement. In this text we will focus on the question of machines taking over the role of the judiciary, and on whether that could be in line with Ronald Dworkin’s right solution thesis.

Using a specific algorithm

Lawyers Casey and Niblett [1] describe a hypothetical future situation in which the information and predictions we can derive from technology will be so precise that we can assign the judge’s role to machines. The process, as they describe it, would be the following: in some US states, judges already use an algorithm to predict the likelihood that the accused will not appear before the court. Although this algorithm has not replaced the judges, it is reasonable to assume that the more effective it becomes, the more judges will rely on it, until they ultimately depend on it entirely.

This (hypothetical) scenario raises the question of how such a move would be in harmony with the very nature of law. To give an answer, we will turn to Dworkin’s work, and in particular to his right solution thesis.

The theory of the right solution and its possible misinterpretation

Early in his career, Dworkin shook the prevailing philosophical currents of his time by arguing that there is always a right solution, even in the most controversial and difficult cases [2]. At first glance, this position seems to support those who favour replacing judges with machines: if a right solution exists, it seems reasonable that it could emerge from a mechanistic process of the highest precision. However, this approach is a misinterpretation of Dworkin’s position.

Dworkin himself had predicted such a misinterpretation. In Law’s Empire (1986), he wrote [3]:

“I have never designed an algorithm to be used in the courtroom. No computer wizard could draw from my arguments a program which, after gathering all the facts of the case and all the texts of previous laws and judgments, would give us a verdict that would find everyone in agreement.”

Dworkin’s statement stems from his belief that the correct method of adjudicating cases is an exercise that is fundamentally interpretive and evaluative and, as such, is based on principles. The judge can find the right solution in each case, but only by finding the best possible interpretation.

The best interpretation is the one that, consistent with the letter of the law, can legitimately justify the coercion the law imposes on those subject to it. In this process, Dworkin argues, the judge tries to preserve the integrity of the law by interpreting it in its best light, bearing in mind that the law is the creation of a community whose unifying element is the attempt to justify state coercion.

Dworkin believed that each case has a right solution, but that every case is nevertheless difficult, and that finding a solution is a very important exercise in political morality. Therefore, despite the formalist flavour of the philosopher’s belief in a correct solution to each case, he recognized that the legal system, being an organic whole, is constantly changing, with its individual elements kept as consistent as possible with one another.

Will technology replace judges?

The question that arises from the above is whether the pace of technological development and the path it has taken will lead to machines effectively replacing judges, finding the right answer even in difficult cases. Machine Learning can indeed readjust a set of rules so that a more general goal is served, which may well be ethically welcome. From this perspective, Machine Learning is dynamic and structured with continuity. Therefore, if it were used to deal with real cases, it would do so with a kind of integrity that would be mechanical in nature.

Nonetheless, the desired goals themselves would remain unchanged. The static nature of the political morality on which the legal system would then be based would detract from legality, in Dworkin’s view. For the philosopher, integrity means that all parts of the legal system can be revised, since argumentative disagreement reaches the foundations of legality by addressing basic questions such as whether and how citizens should be taxed, or whether there should be policies of positive discrimination [4]. Following this reasoning, legislative policies are based on principles that arise through the interpretation of difficult cases. This process aims to consolidate past decisions in a way that would justify state coercion on the part of the interpretive community.

The conclusion

To sum up, it is clear that the prospect of Machine Learning replacing judges could hardly be in harmony with legality as Dworkin understood it. Machine Learning does not work on principles. It operates on statistical relationships that do not reflect ethical principles. Its operation would therefore be undermined to the extent that a system (the legal system) requires it to act in a fundamentally moral way.

*Philippos Kourakis is a lawyer specializing in Philosophy of Law and Criminology. He holds a Bachelor’s degree from the Law School of Athens, a Master’s degree in Criminology from Oxford University and a Master’s degree in Philosophy of Law from the National and Kapodistrian University of Athens.

[1] Casey, Anthony J. and Niblett, Anthony, Self-Driving Laws (June 5, 2016). Available at SSRN: https://ssrn.com/abstract=2804674

[2] Ronald Dworkin, Taking Rights Seriously (London: Duckworth, 1978), chapter 4

[3] Ronald Dworkin, Law’s Empire (Cambridge, MA: Harvard University Press, 1986), p. 412

[4] Ibid., p. 73

Homo Digitalis receives two scholarships for free participation in the most popular conference for the protection of privacy and private data in the world

Our organization has the pleasure and honour of having received two scholarships from the “EPIC Public Voice Scholarships for NGOs” program to participate in the 40th “International Conference of Data Protection and Privacy Commissioners” in Brussels (22-26 October).

The scholarships were available to only 20 organizations worldwide. They are provided by EPIC, an internationally respected research centre headquartered in Washington, D.C., U.S.A., which focuses on the protection of privacy, freedom of expression and democratic values in the information society.

The conference is organized by the European Data Protection Supervisor (EDPS) and is widely respected in the field of privacy and personal data protection.

By taking part, we will be able to attend speeches and discussions on various relevant issues and exchange ideas with other digital rights organizations from all over the world, with academics, and with representatives of EU and Council of Europe bodies, governments of other countries, supervisory authorities and companies.

The schedule of the event can be found here.

Stay tuned!

Homo Digitalis on the 'Epi tis Angelaki' show (ERT3)

On October 11, 2018, Elpida Vamvaka, president of Homo Digitalis, was a guest on the ‘Epi tis Angelaki’ show on ERT3 radio.