We successfully presented our actions at InfoRights 2024 in Corfu

Homo Digitalis had the great pleasure of participating in the Third Interdisciplinary Conference InfoRights, organized by the Reading Society of Corfu on 17 & 18 May 2024 and entitled “Human Rights in the Information Age: Benchmarks of History, Law, Ethics and Culture”.

Specifically, on Saturday 18 May, our member Christos Zanganas represented Homo Digitalis, presenting our key actions in the field of digital rights across our three pillars of interest: Awareness, Advocacy and Strategic Legal Actions!

We would like to thank the organizers for the kind invitation!

We received an award at CPDP for the Reclaim Your Face campaign

On Tuesday 21 May, at the Computers, Privacy & Data Protection (CPDP) conference in Brussels, the first ever “Europe AI Policy Leader Awards” by the Center for AI and Digital Policy (CAIDP) took place!

There, the European Digital Rights (EDRi) network, of which Homo Digitalis is the only member in Greece, received the “AI Policy Leader in Civil Society” award for the Reclaim Your Face campaign, which played a leading role in setting limits on the use of remote biometric identification in the EU AI Act!

It is a great honour for Homo Digitalis to be a co-founding member of this campaign, and this award fills all campaign members from the EDRi network and other allies with enthusiasm for all that we can achieve when we act strategically under a common goal!

Thank you CAIDP for this important recognition!

Homo Digitalis with double representation at Digital World Summit Greece

Homo Digitalis has the great honor and pleasure to participate with double representation at the Digital World Summit Greece, on Tuesday 28 May at the National Hellenic Research Foundation!

Specifically, our Vice President and Partner at Digital Law Experts (DLE), Stefanos Vitoratos, will participate as a moderator in the 1st panel of the conference, on the topic “AI: The intersection between regulation and innovation”, in a fascinating panel discussion featuring Dimitris Gerogiannis (President AI Catalyst), Lillian Mitrou (Professor, Department of Information & Communication Systems Engineering, University of the Aegean), Charalambos Tsekeris (President of the National Bioethics & Technology Ethics Committee), Yannis Mastrogeorgiou (Special Secretary of Long Term Planning, Presidency of the Greek Government) and Fotis Draganidis (Director of Technology at Microsoft Hellas).

Our Director for Human Rights & Artificial Intelligence, Lamprini Gyftokosta, will participate as a speaker in the 2nd panel of the Digital World Summit Greece 2024, on the topic “Cybersecurity, Citizen Security and Artificial Intelligence”! This very interesting panel will be moderated by Konstantinos Anagnostopoulos (Co-founder & Director, Athens Legal Tech), while Natalia Soulia (Senior Associate | Privacy, Data Protection & Cybersecurity, KG Law Firm), Thomas Dombridis (Head of the General Directorate of Cybersecurity of the Ministry of Digital Governance), Antonis Broumas (Head of the Law Department of the Ministry of Digital Governance) and the Head of the Law Department of the Ministry of Justice of the Republic of Greece will also be present as speakers.

You can register and read more about the conference and its programme here.

Digital Services Act: Striking a Balance Between Online Safety and Free Expression

By Anastasios Arampatzis

The European Union’s Digital Services Act (DSA) stands as a landmark effort to bring greater order to the often chaotic realm of the internet. This sweeping legislation aims to establish clear rules and responsibilities for online platforms, addressing a range of concerns from consumer protection to combatting harmful content. Yet, within the DSA’s well-intentioned provisions lies a fundamental tension that continues to challenge democracies worldwide: how do we ensure a safer, more civil online environment without infringing on the essential liberties of free expression?

This blog delves into the complexities surrounding the DSA’s provisions on chat and content control. We’ll explore how the fight against online harms, including the spread of misinformation and deepfakes, must be carefully weighed against the dangers of censorship and the chilling of legitimate speech. It’s a balancing act with far-reaching consequences for the future of our digital society.

Online Harms and the DSA’s Response

The digital realm, for all its promise of connection and knowledge, has become a breeding ground for a wide range of online harms. Misinformation and disinformation campaigns erode trust and sow division, while hate speech fuels discrimination and violence against marginalized groups. Cyberbullying shatters lives, particularly those of vulnerable young people. The DSA acknowledges these dangers and seeks to address them head-on.

The DSA places new obligations on online platforms, particularly Very Large Online Platforms (VLOPs) with a significant reach. These requirements include:

- Increased transparency: Platforms must explain how their algorithms work and the criteria they use for recommending and moderating content.

- Accountability: Companies will face potential fines and sanctions for failing to properly tackle illegal and harmful content.

- Content moderation: Platforms must outline clear policies for content removal and implement effective, user-friendly systems for reporting problematic content.

The goal of these DSA provisions is to create a more responsible digital ecosystem where harmful content is less likely to flourish and where users have greater tools to protect themselves.

The Censorship Concern

While the DSA’s intentions are admirable, its measures to combat online harms raise legitimate concerns about censorship and the potential suppression of free speech. History is riddled with instances where the fight against harmful content has served as a pretext to silence dissenting voices, critique those in power, or suppress marginalized groups.

Civil society organizations have stressed the need for the DSA to include clear safeguards to prevent its well-meaning provisions from becoming tools of censorship. It’s essential to have precise definitions of “illegal” or “harmful” content, limited to content that directly incites violence or breaks existing laws. Overly broad definitions risk encompassing satire, political dissent, and artistic expression, which are all protected forms of speech.

Suppressing these forms of speech under the guise of safety can have a chilling effect, discouraging creativity, innovation, and the open exchange of ideas vital to a healthy democracy. It’s important to remember that what offends one person might be deeply important to another. The DSA must tread carefully to avoid empowering governments or platforms to unilaterally decide what constitutes acceptable discourse.

Deepfakes and the Fight Against Misinformation

Deepfakes, synthetic media manipulated to misrepresent reality, pose a particularly insidious threat to the integrity of information. Their ability to make it appear as if someone said or did something they never did has the potential to ruin reputations, undermine trust in institutions, and even destabilize political processes.

The DSA rightfully recognizes the danger of deepfakes and places an obligation on platforms to make efforts to combat their harmful use. However, this is a complex area where the line between harmful manipulation and legitimate uses can become blurred. Deepfake technology can also be harnessed for satire, parody, or artistic purposes.

The challenge for the DSA lies in identifying deepfakes created with malicious intent while protecting those generated for legitimate forms of expression. Platforms will likely need to develop a combination of technological detection tools and human review mechanisms to make these distinctions effectively.

The Responsibility of Tech Giants

When it comes to the spread of harmful content and the potential for online censorship, a large portion of the responsibility falls squarely on the shoulders of major online platforms. These tech giants play a central role in shaping the content we see and how we interact online.

The DSA directly addresses this immense power by imposing stricter requirements on the largest platforms, those deemed Very Large Online Platforms. These requirements are designed to promote greater accountability and push these platforms to take a more active role in curbing harmful content.

A key element of the DSA is the push for transparency. Platforms will be required to provide detailed explanations of their content moderation practices, including the algorithms used to filter and recommend content. This increased visibility aims to prevent arbitrary or biased decision-making and offers users greater insight into the mechanisms governing their online experiences.

Protecting Free Speech – Where do We Draw the Line?

The protection of free speech is a bedrock principle of any democratic society. It allows for the robust exchange of ideas and challenges to authority, and it provides a voice for those on the margins. Yet, as the digital world has evolved, the boundaries of free speech have become increasingly contested.

The DSA represents an honest attempt to navigate this complex terrain, but it’s vital to recognize that there are no easy answers. The line between harmful content and protected forms of expression is often difficult to discern. The DSA’s implementation must include strong safeguards informed by fundamental human rights principles to ensure space for diverse opinions and critique.

In this effort, we should prioritize empowering users. Investing in media literacy education and promoting tools for critical thinking are essential in helping individuals become more discerning consumers of online information.

Conclusion

The Digital Services Act signals an important turning point in regulating the online world. The struggle to balance online safety and freedom of expression is far from over. The DSA provides a strong foundation but needs to be seen as a step in an ongoing process, not a final solution. To ensure a truly open, democratic, and safe internet, we need continuing vigilance, robust debate, and the active participation of both individuals and civil society.

We participate in InfoRights 2024 in Corfu

Homo Digitalis has the great pleasure to participate in the Third Interdisciplinary Conference, organized by the Corfu Reading Society on 17 & 18 May 2024 entitled “Human Rights in the Information Age: Benchmarks of History, Law, Ethics and Culture”.

Our member Christos Zanganas will represent Homo Digitalis at the Conference, and on Saturday 18 May at 12:00 he will present Homo Digitalis’ work for the protection of human rights in relation to the information society in Greece!

We would like to thank the organizers for the honor of the invitation!

You can register for free to attend the conference in person or read more about it here.

The focus of the interdisciplinary themes of the conference is human rights in relation to information in terms of history, regulation (law and ethics) and culture. It is co-organised by the Cultural and Historical Heritage Documentation Laboratory of TABM, the IHRC Research Group of TABM, and the Ionian University Library and Information Centre with the collaboration of the UNESCO Chair on Threats to Cultural Heritage and Heritage-related Activities of the Ionian University, as well as the Corfu Bar Association and the Ionian University Museum Collections.

We bring the "Misinformation" and "What The Future Wants" editions of the international exhibition "The Glass Room" to ADAF 2024!

The “Misinformation” and “What The Future Wants” editions of Tactical Tech’s international exhibition “The Glass Room” will be at the Athens Digital Arts Festival 2024 (ADAF2024)!!

In collaboration with Tactical Tech, the exhibition is brought to Greece by Homo Digitalis, Digital Detox Experience and Open Lab Athens, with the Homo Digitalis team and specifically our members Sophia Antonopoulou, Ariana Rapti and Alkmini Gianni, organising the two exhibitions for ADAF 2024!

ADAF 2024 will take place from 16 to 26 May at the Former Santarosa Courts (48 Stadiou & Arsaki). “The Glass Room: Misinformation Edition” will be available to the audience throughout the festival, while “The Glass Room: What the Future Wants Edition” will be presented in the form of a workshop on Saturday 18/5 at 14.00 by our members Sophia Antonopoulou, Ariana Rapti and Alkmini Gianni.

You can buy tickets for ADAF 2024 and read the full festival programme here.

Many thanks to our three members for their time, energy and dedication in organizing these exhibitions, as well as to ADAF for hosting us!

The Glass Room exhibition has travelled to 61 countries around the world, counting 471 award-winning events and more than 350,000 visitors worldwide. In the past, Homo Digitalis, Digital Detox Experience and Open Lab Athens have presented the exhibitions at the National Library of Greece in Athens and at JOIST Innovation Park in Larissa.

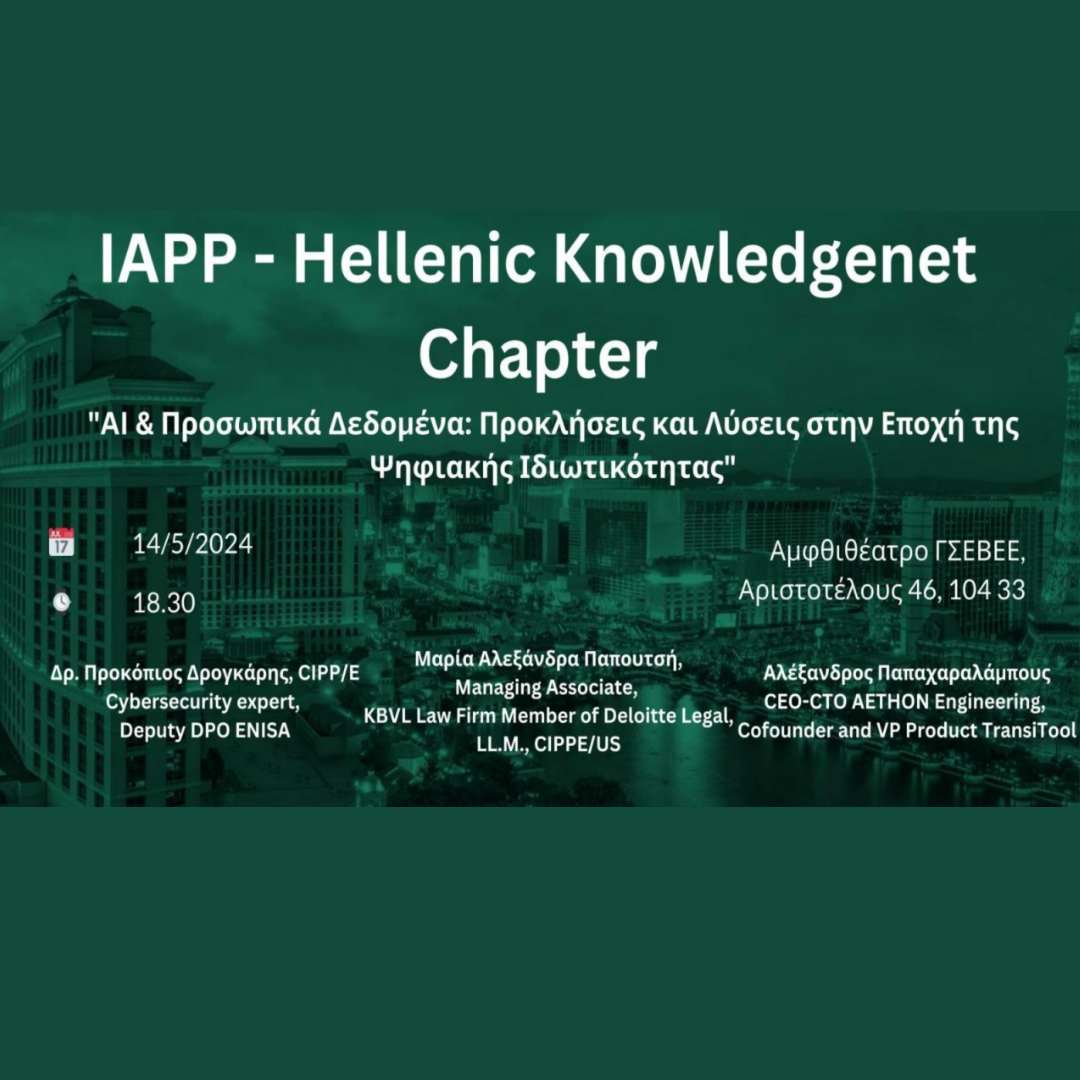

Event of the Greek Chapter of IAPP "AI & Personal Data: Challenges & Solutions in the Age of Digital Privacy"

The Presidency of the Hellenic Chapter of the IAPP (International Association of Privacy Professionals) is organizing an event on “AI & Personal Data: Challenges & Solutions in the Age of Digital Privacy” on 14 May at 18:30 – 19:00 at FHW GSEBEE, Small Business Institute of GSEBEE.

The discussion will be moderated by Stefanos Vitoratos, Partner at Digital Law Experts and Co-Founder and Vice President of Homo Digitalis, while speakers include:

- Dr. Prokopios Drogaris, Cybersecurity Expert, Deputy DPO ENISA, CIPP-E

- Maria Alexandra Papoutsi, Managing Associate, KBVL Law Firm Member of Deloitte Legal, CIPP-E/US

- Alexandros Papacharalambous, CEO-CTO AETHON Engineering, Cofounder & VP Product TransiTool

To register and attend the event in person click here, or to register for an online presentation, click here.

Homo Digitalis is honored to count all members of the Bureau of the Hellenic Chapter of the IAPP (International Association of Privacy Professionals) among its own members!

Homo Digitalis participates for the 4th time in CPDP!

Homo Digitalis participates for the 4th time in CPDP, the most recognized international conference for the protection of personal data and privacy!

After our continuous presence there with panel talks and workshops, this year we are honored to be present through a new medium! Namely, we will be interviewed as part of Avatar.fm, a live radio project organized by the social radio station dublab.de and Privacy Salon during CPDP!

Avatar.fm will set the pulse of the conference from 22 to 24 May, providing a platform for organisations with a leading role in data protection and privacy, highlighting their activities in the field of AI and new technologies!

Avatar.fm will be broadcasting live from the iconic Gare Maritime in Brussels, and will also host recorded broadcasts and live DJ sets! Those of you who cannot be there can listen to the shows on dublab.de.

Homo Digitalis will be represented in this interview by our Director on Artificial Intelligence & Human Rights, Lamprini Gyftokosta.

Stay tuned for the full programme!

We participated in a survey of experts on the use of new technologies in the field of education in the framework of the MILES project

In the age of hyper-connectivity, people are inundated by a constant flow of information and news that brings, on the one hand, better and more consistent information about world events and, on the other hand, many risks associated with fake news and misinformation.

Homo Digitalis had the pleasure to participate in a relevant research-discussion conducted by the MILES project (MIL and PRE-BUNKING approaches for Critical thinking in the education sector) and addressed to experts regarding the use of new technologies in the field of education.

The aim of the discussion was to gather information on misinformation in order to develop effective strategies for the education sector. The discussion focused on issues such as digital literacy, the cultivation of critical thinking as a tool for identifying fake news, the role of education and the education system, and possible policy interventions.

Homo Digitalis was represented in the discussion by our Director of Human Rights & Artificial Intelligence, Lamprini Gyftokosta. We would like to thank the civil society organization KMOP Social Action and Innovation Centre for including us and for the kind invitation.